|

| |

System: Activity Detection |

|

|

| Sensors |

The system uses two sensors in the environment (a microphone and a web camera)
and one sensor on the computer (a computer activity logger). The environment
sensor data are integrated by a data collection tool that Bilge Mutlu and
Ian Li developed. The processed sensor data are stored in log files.

The video input for the system comes from a Creative Ultra NX web camera. Its
wide-angle lens covers a large area of the office, and its image quality is
very good compared to other web cameras, which makes it well suited as a sensor.

|

| Figure 1. The Creative Ultra NX web camera |

The system processes the input from the web camera to compute a motion level
between 0 and 1, inclusive. The motion level is the normalized difference
between the pixels of the current image and those of a previous image.
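The source does not specify how the difference is normalized. As a minimal sketch, assuming 8-bit grayscale frames stored as flat lists and a mean absolute difference (the function name `motion_level` and the `max_value` parameter are hypothetical), the computation might look like this:

```python
def motion_level(previous, current, max_value=255):
    """Return the mean absolute pixel difference, scaled to [0, 1].

    Assumption: both frames are flat lists of grayscale intensities in
    the range 0..max_value. The original system may normalize differently.
    """
    if len(previous) != len(current) or not previous:
        raise ValueError("frames must be non-empty and the same size")
    total = sum(abs(c - p) for p, c in zip(previous, current))
    return total / (max_value * len(previous))


# Two tiny 2x2 grayscale frames, flattened row by row.
frame_a = [0, 0, 255, 255]
frame_b = [0, 255, 255, 0]
level = motion_level(frame_a, frame_b)  # half of the pixels flipped
```

Identical frames give 0, and frames in which every pixel changes from black to white give 1, which matches the inclusive range described above.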

|

| Figure 2. Processing of the images

to produce a value for the motion level. |

A mini microphone records the sound level in the environment. Sound levels
are averaged over quarter-second intervals to give the final sound level
value. Since sound level is the only feature recorded, the participants'
identities and topics of conversation are kept private.
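The quarter-second averaging could be sketched as follows. This assumes the raw input is a list of amplitude samples and that "sound level" means the mean absolute amplitude in each window; the source does not say which measure was used, and the names `sound_levels` and `window_seconds` are illustrative.

```python
def sound_levels(samples, sample_rate, window_seconds=0.25):
    """Average the absolute amplitude over consecutive fixed-length windows.

    Assumption: a trailing partial window is dropped rather than averaged.
    """
    window = int(sample_rate * window_seconds)
    if window <= 0:
        raise ValueError("window must contain at least one sample")
    levels = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        levels.append(sum(abs(s) for s in chunk) / window)
    return levels


# At 16 samples per second, a quarter-second window holds 4 samples.
samples = [0.5, -0.5, 0.5, -0.5, 0.0, 0.0, 0.0, 0.0]
levels = sound_levels(samples, sample_rate=16)  # one loud window, one silent
```

Because only the averaged level is kept, the original waveform cannot be recovered from the log, which is what keeps conversations private.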

|

| Figure 3. The mini microphone |

The computer activity logger uses the AmIBusy toolkit that James Fogarty

developed. The toolkit provides classes to record computer input events such

as keystrokes, mouse clicks, and mouse movements. To protect users' privacy,
the program does not record which keys are pressed, only whether the keyboard
is being used. Every tenth of a second, the logger records whether there was
any computer input.
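The sketch below illustrates this kind of privacy-preserving log. It does not use the AmIBusy toolkit's API; it assumes input events arrive as timestamps in milliseconds, and the function name `input_activity` is hypothetical.

```python
def input_activity(event_times_ms, duration_ms, interval_ms=100):
    """Return one boolean per interval: was there any input in that slot?

    Only the presence of input is kept. The kind of event and the key
    pressed are never stored.
    """
    slots = duration_ms // interval_ms
    active = [False] * slots
    for t in event_times_ms:
        index = t // interval_ms
        if 0 <= index < slots:
            active[index] = True
    return active


# Three input events during half a second of logging.
events = [20, 50, 310]
activity = input_activity(events, duration_ms=500)
```

Here the two events in the first tenth of a second collapse into a single `True`, so the log reveals that the computer was in use but not how much was typed.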

|

| Figure 4. The computer activity

logger |

|

|

|

| Data Collection |

The data from the environment sensors are integrated by a data collection
tool that Bilge Mutlu and Ian Li developed. The tool takes data from the
sensors, processes them, and stores them in a log file.

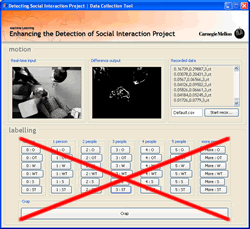

The collection tool has a user interface that allows a data collector to
log the actual state of the environment in real time. However, because data
were collected from several spaces at the same time for several hours, it
was not possible to have an observer record the activities as they happened.
Instead, the system takes a snapshot of the environment every 30 seconds,
and the snapshots are later labeled for activity.
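One way to attach the snapshot labels to the logged sensor values is sketched below. The source does not describe how labels were aligned with readings, so this assumes that the label of the snapshot taken at the start of each 30-second window applies to every reading in that window; `label_readings` and its parameters are hypothetical names.

```python
def label_readings(readings, snapshot_labels, period=30):
    """Attach an activity label to each (time_seconds, value) reading.

    Assumption: snapshot k is taken at time k * period and its label
    covers readings in [k * period, (k + 1) * period). Readings that
    fall outside the labeled snapshots are dropped.
    """
    labeled = []
    for time_s, value in readings:
        index = int(time_s // period)
        if 0 <= index < len(snapshot_labels):
            labeled.append((time_s, value, snapshot_labels[index]))
    return labeled


# Illustrative motion readings and two labeled snapshots (not study data).
readings = [(0, 0.1), (29, 0.2), (30, 0.8), (61, 0.0)]
labels = ["sitting", "walking"]
labeled = label_readings(readings, labels)
```

Under this assumption, any activity shorter than 30 seconds shares its window with whatever else happened in that half minute.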

|

| Figure 5. The user interface for

the data collection tool that Bilge Mutlu and Ian Li developed. The

set of buttons is not used. |

|

|

|

| Deployment |

The system was deployed in four office environments: the offices of two
professors and two Ph.D. students. The participants agreed to be recorded
throughout the day, from when they arrived in their office to when they left.

The sensors were installed in each participant's office. The environment
sensors (web camera and microphone) were mounted on the ceiling in the middle
of the room to capture as much of the space as possible. The computer activity
logger was installed on the participant's computer.

|

| Figure 6. The sensors deployed in

two participant offices. |

|

|

|

| Results |

I ran several machine learning algorithms (Bayes Net, Naive Bayes, Logistic
Regression, and Bagging with reduced-error pruning trees) on the features
collected from the environment sensors. I analyzed the features separately
for each participant and day.

| Participant | Accuracy | Classifier | Recall: Outside | Recall: Sitting | Recall: Sit&Talk | Recall: Walking |
| --- | --- | --- | --- | --- | --- | --- |
| Prof 1 Day 1 | 88.04% | Bagging | 0.944 | 0.877 | 0.948 | 0 |
| Prof 1 Day 2 | 92.86% | Bayes Net | 0.657 | 0.97 | 0.986 | 0 |
| Prof 2 Day 1 | 90.03% | Bagging | 0.829 | 0.931 | 0.923 | 0.381 |
| Prof 2 Day 2 | 87.62% | Bagging | 0.886 | 0.898 | no data | 0.227 |
| Student 1 | 90.85% | Bayes Net | 0.723 | 0.972 | 0.867 | 0 |
| Student 2 | 93.08% | Bagging | 0.88 | 0.976 | 0 | 0 |

| Table 1. The best detection accuracies for each set of data (participant and day) |

The table shows that the activities {outside, sitting, sitting and talking,
walking} can be detected with high overall accuracy using features from
simple sensors (web camera and microphone). The best detection accuracies
range from about 88% to 93%. The best learning algorithms for detecting
activities were Bayes Net and Bagging with reduced-error pruning trees.

Notice that the recall for outside, sitting, and sitting and talking is
generally very good. The amount of data collected for these activities was
sufficient to train the classifiers. On the other hand, the detection of
walking is very poor. Only a few algorithms were able to detect walking at
all, and even those detected it poorly.

The poor detection can be attributed to the small amount of training data
labeled walking. Another reason is that the data were labeled in half-minute
sets. A walking activity takes only a few seconds, so the coarse labeling
hid the features of walking.
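To make the relationship between overall accuracy and per-activity recall concrete, here is a small sketch of both measures. The labels below are made up for illustration and are not data from the study; the function names are hypothetical.

```python
def accuracy(actual, predicted):
    """Fraction of all examples whose predicted label matches the actual one."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


def recall(actual, predicted, label):
    """Fraction of examples of `label` that were predicted as `label`.

    Returns None when there are no examples of the label, which
    corresponds to a "no data" cell.
    """
    relevant = [p for a, p in zip(actual, predicted) if a == label]
    if not relevant:
        return None
    return sum(p == label for p in relevant) / len(relevant)


# Illustrative labels only: walking is rare and is misclassified as sitting.
actual = ["sitting"] * 8 + ["outside"] + ["walking"]
predicted = ["sitting"] * 8 + ["outside"] + ["sitting"]

overall = accuracy(actual, predicted)
walking_recall = recall(actual, predicted, "walking")
```

In this example the overall accuracy is 0.9 even though the recall for walking is 0, because a rare class contributes little to the overall figure.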

Notice that the detection accuracy is fairly consistent across the different
participants. It may therefore be sufficient to train the classifier on one
day of data and still detect activity accurately for two days or a week. A
classifier trained on one person might also generalize to several people
with little or no modification. |

|

|